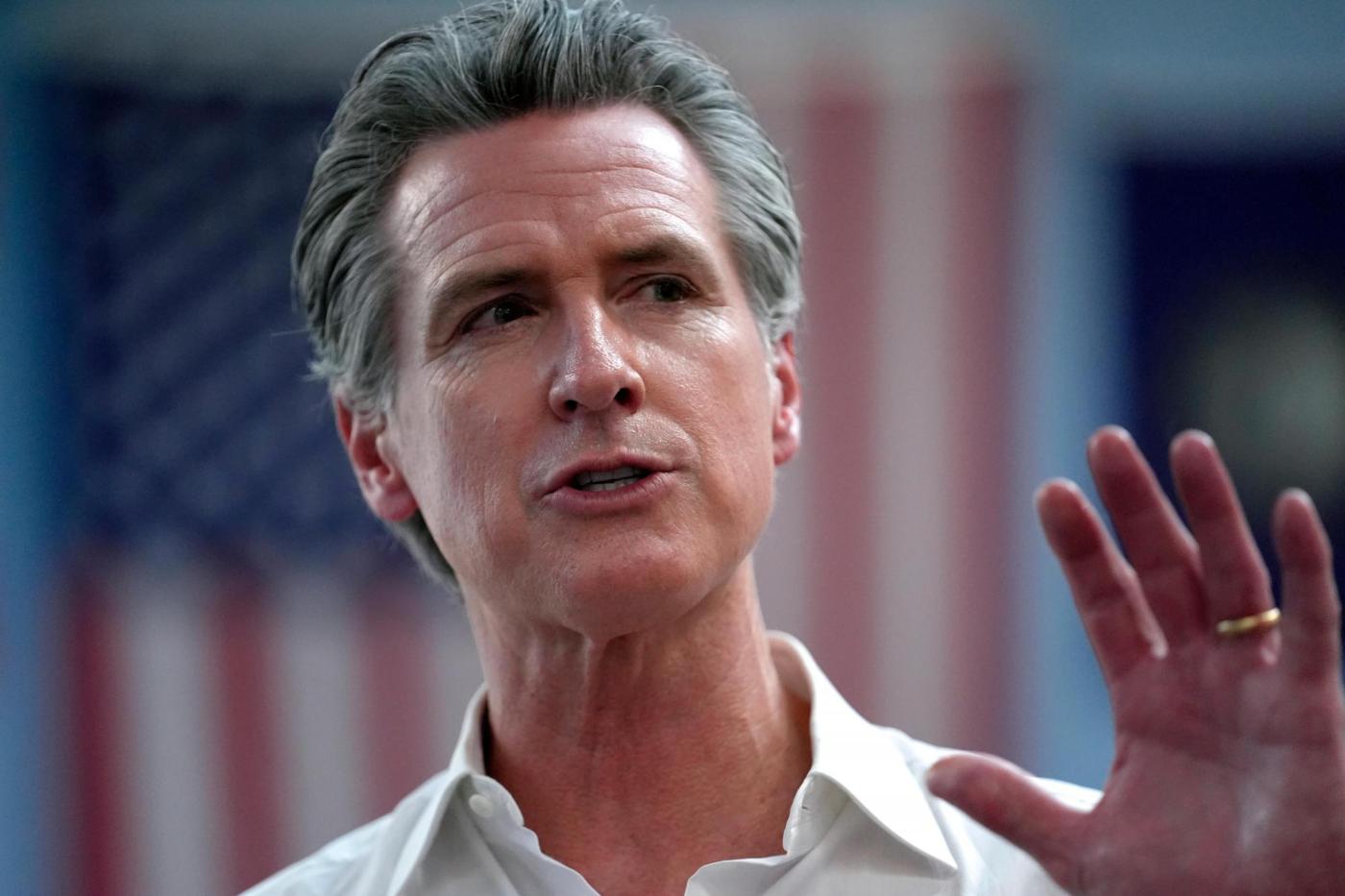

Gov. Gavin Newsom is clapping back at Elon Musk, who reposted a video featuring AI-generated fake audio of Vice President Kamala Harris, by vowing to sign a state law cracking down on voice manipulation in campaign ads.

It’s the latest beef between the two clean-energy allies turned political foes. Last month, Musk said he would move SpaceX’s headquarters from Hawthorne to Texas after Newsom signed a law preventing school districts from alerting parents if children change their gender identity.

Earlier this week, Musk reposted a fake campaign video that uses an AI-generated imitation of Harris’s voice to joke that President Joe Biden was senile and that she was incompetent.

Newsom then posted a screenshot of an article about Musk circulating the video and wrote, “Manipulating a voice in an ‘ad’ like this one should be illegal. I’ll be signing a bill in a matter of weeks to make sure it is.”

Musk fired back with a retort of his own, saying, “parody is legal in America.”

Their online fracas underscores the tension legislators face between regulating AI in elections and upholding First Amendment rights to freedom of expression.

A spokesperson for Newsom’s office did not specify which bill Newsom was referring to in his X post, but said his concerns would be addressed by AI legislation already working its way through the Legislature.

One key challenge with these legislative efforts is determining what content they apply to, said Sarah Roberts, professor of information studies at UCLA.

“The problem with what Musk did is that it wasn’t really a campaign ad, it was a fake video, so it’s unclear if what Newsom is talking about would address that,” she said, referring to the fact that the video is a parody and not official campaign material.

“If Newsom is saying voice and video manipulation of candidates should be banned, that’s a much broader brush,” she said. “That would actually mean that what Musk circulated would fall into that category.”

She said the problem is that the broader the regulation, the greater the risk of First Amendment pushback. However, she added, that risk should not be enough to hold back efforts to regulate the use of AI around elections.

“If we don’t, in fact, do something like that, I think the consequences are pretty clear as to what we can expect to come, which is the entire information landscape around elections being untrustworthy and useless,” she said.


State legislators have proposed two key bills to address the use of AI in elections. Both have passed the state Assembly and await a vote in the state Senate after the summer recess ends.

Asm. Wendy Carrillo, D-Los Angeles, authored AB 2355, which would require campaign advertisements to include a disclosure if they use any AI-generated audio or visual content.

Asm. Marc Berman, D-Palo Alto, authored AB 2655, which would require large online platforms to block the posting of “materially deceptive content” related to elections. It would also require certain content to be labeled as “inauthentic, fake, or false” during a window of time around elections.

“AB 2655 will ensure that online platforms restrict the spread of election-related deceptive deepfakes meant to prevent voters from voting or to deceive them based on fraudulent content,” Berman said in a statement about his bill. “Deepfakes are a powerful and dangerous tool in the arsenal of those that want to wage disinformation campaigns, and they have the potential to wreak havoc on our democracy by attributing speech and conduct to a person that is false or that never happened.”

While anyone applying a degree of common sense could easily recognize the video Musk circulated as a joke, AI can be used in more nefarious ways in elections, Roberts said.

For example, she said, AI could be used to generate a fake “leaked audio” recording of a candidate saying offensive or damning statements.

“This is actually a bipartisan issue,” said Roberts. “I think the sooner that our elected officials at the state or the federal level realize that they are all threatened and could all find themselves on the wrong side of the manipulated video, the sooner we will see a united front trying to figure out how to address this.”