About a year ago, a coyote snuck into a chicken coop at Meredith Morris’ Seattle home and treated itself to a feathery dinner. Unsure how to break news of the fowl fatality to her children, Morris turned to artificial intelligence to find the right words.

Morris, the director and principal scientist for human-AI interaction research at Google, took some creative liberties in prompting a generative AI program to eulogize her chicken. She wound up with the script for a play memorializing Amelia Eggheart, along with an itch to explore further how AI could be used to commemorate the dead.

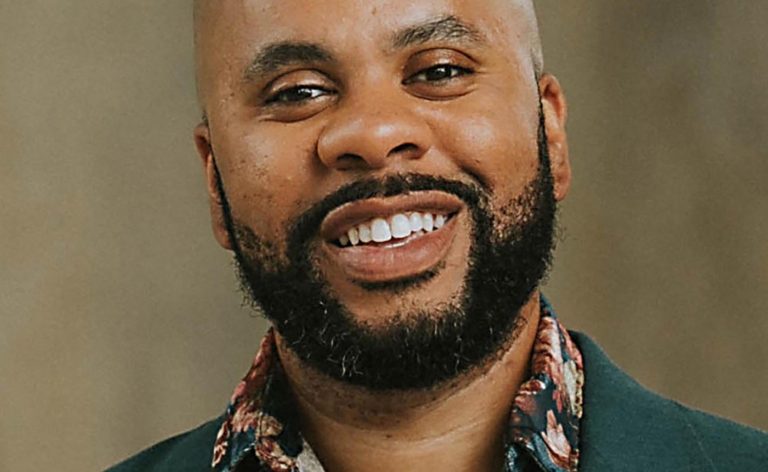

“What she sent me was hysterical,” said Jed Brubaker, a University of Colorado Boulder professor and co-researcher with Morris. “It was a stage play with lighting directions that opened with, ‘We gather today to honor the memory of Amelia Eggheart.’ That was how we started talking about what was going on in this space.”

“Generative ghosts,” AI chatbots based on the data of the dead, are a focal point of Brubaker and Morris’ research. They just received $75,000 in funding from Google to study how best to use AI to keep our dearly departed loved ones alive in the digital sphere.

“We anticipate that within our lifetimes it may become common practice for people to create a custom AI agent to interact with loved ones and/or the broader world after death,” Morris and Brubaker wrote in their latest paper, “Generative Ghosts: Anticipating Benefits and Risks of AI Afterlives.”

Brubaker has studied the intersections of death and technology for the past 15 years. After his grandfather died, he wondered what it would look like if, instead of scrolling through his grandfather’s Facebook memorial page, he could sit down with his grandpa in virtual reality and hear him tell the stories flooding the social media platform.

With the advent of generative artificial intelligence, which can create stories based on prompts and data, Brubaker knew it was only a matter of time before people started uploading information about their late family and friends (emails, data held in cloud storage, digitized journal entries, text messages, social media posts) in order to create chatbots that not only know personal information about the deceased but can also mimic their speech.

If that all feels a bit dystopian, Brubaker said it’s an inevitable outcome of our technological prowess, and that research can help guide how to proceed most ethically and practically into this macabre multimedia realm.

“There will be data sets and people will use AI to understand those data sets, and unless someone is really insistent that all their data be deleted, a lot of whether we end up in a ‘Black Mirror’ scenario or not has less to do with data and more to do with how it is being used and how are we representing it to people,” Brubaker said, referencing the dark British TV series that often spotlights technology gone rogue.

But programs using AI to let people talk to digitized versions of the dead already exist:

- Seance AI boasts that “our revolutionary AI technology recreates the essence of individuals who’ve left our world, giving you the opportunity to have heartfelt conversations with them through a user-friendly chat interface.”
- With Replika, you can create your own AI companion “who is eager to learn and would love to see the world through your eyes.”
- In re;memory, “you can find solace in expressing your love and forgiveness, creating a bridge to the cherished moments you hold dear.”
- And then there’s the Dadbot from several years ago, a project in which a son documented his experience of giving his dying father artificial immortality.

As AI and its capabilities become increasingly mainstream, Brubaker and his fellow researchers are studying the most suitable user experience for communicating with an artificial representation of a deceased person.

For example, if Brubaker were to upload his grandfather’s data and ask the chatbot what his grandpa’s favorite color was, should the bot respond with “His favorite color was green” or “My favorite color is green”?

“Does the ghost represent the person or reincarnate the person?” Brubaker said.

They’re not yet sure.

As part of their research, Brubaker and his team work with members of the general public as well as with people who have lost loved ones, death doulas and those in palliative care.

Ashley Harvey, a former grief counselor and current Colorado State University professor who teaches a course called “Death, Dying and Grief,” said she can see potential upsides and downsides for the ghostly chatbots.

Everyone grieves differently, she said.

Harvey said that within the past few decades, American culture has shifted toward maintaining a continuing bond with dead loved ones rather than insisting on moving on quickly. She could see a generative ghost becoming an avenue to keep that bond alive.

“Then, at the same time, there are some tasks that grievers have to accomplish, and that’s accepting the reality of the loss, experiencing the pain of the loss,” Harvey said. “If a generative ghost disrupts that process — if we aren’t really accepting that our loved one has died and not really experiencing the pain or adjusting to the world without them, then we might worry a little bit.”

Harvey proposed the idea of a ghostly chatbot to her undergraduate students. While she presented it positively as an interesting development in their field, she said her students reacted negatively.

“They kept using the word ‘humanity,’ ” Harvey said. “There’s not the humanity or soul or spirit in the bot, and they thought it could be confusing or interfere with grief.”

Brubaker acknowledges there could be misuses or inappropriate features that might escalate grief. Push notifications from the great beyond, for example, seem intrusive.

“When people are bereft, there is a really important principle in grief literacy that people get to be in control of when and how they’re engaging in painful memories,” Brubaker said. “It’s nice to have a scrapbook of old memories, but you get to go to the bookshelf and pull it down when you want to.”

However, he said knee-jerk reactions against the technology are a sign that it’s an idea worth exploring further.

There is a movement in grief literacy, Brubaker said, to recognize that isolating and repressing grief are harmful, and that deciding to mourn for a set period of time and then move on does more harm than good.

Plus, people have always talked to and memorialized the dead, Brubaker said.

“When we think about this from a clinical standpoint, if that time spent using the technology is persistent and disruptive and maladaptive then that could be a problem,” Brubaker said, noting that’s why he cares about the user experience. “It’s really important to me that the user or person who is interacting with the generative ghost be the one who is in control.”

The Google grant will go toward studying questions like whether a generative ghost should be static or evolve over time and when it would be appropriate for the ghost to take some kind of action — like a notification — or remain passive.

“There are entire companies and industries popping up, and we can stick our heads in the ground or figure out the right way to do it.”